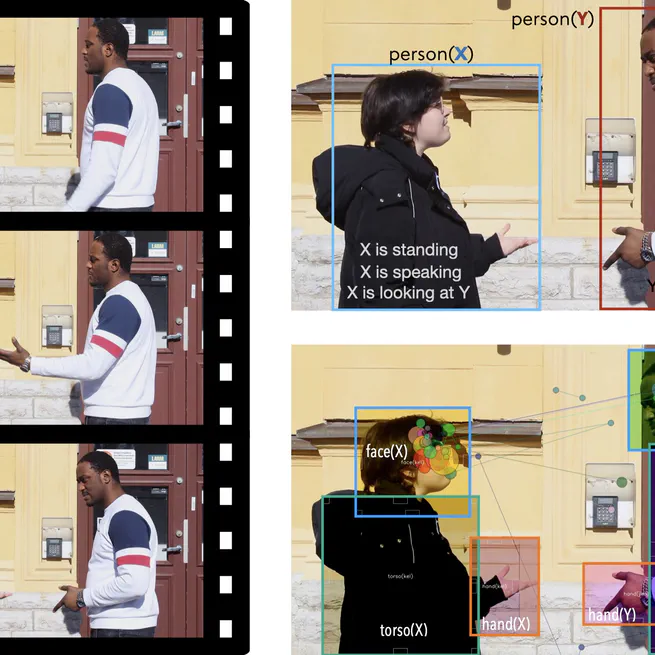

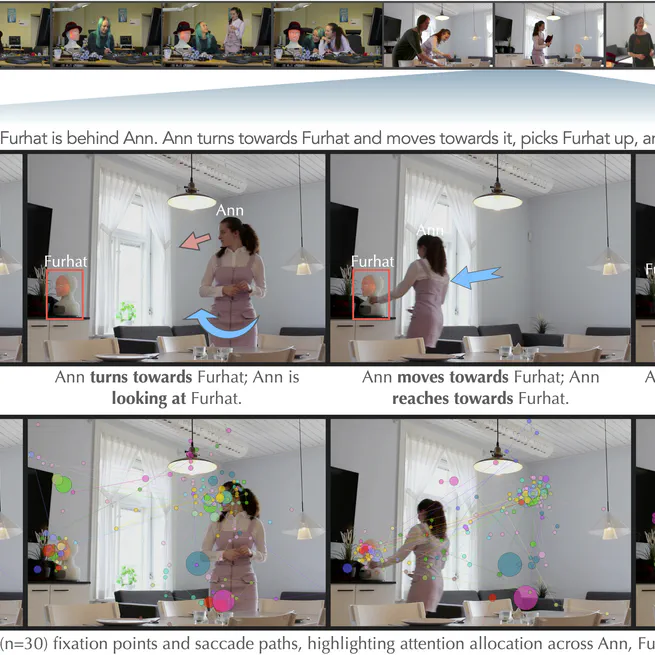

This naturalistic multimodal dataset captures 27 everyday interaction scenarios with detailed annotations of visuo-spatial and auditory cues, together with eye-tracking data, offering rich insights for research in human interaction, attention modeling, and cognitive media studies.

May 1, 2025

We explore how cues such as gaze, speech, and motion guide visual attention when people observe everyday interactions, revealing cross-modal patterns through eye-tracking data and structured event analysis.

January 30, 2025

This doctoral thesis examines how visuospatial features, both low-level (e.g., kinematics) and high-level (e.g., gaze, speech), shape perception and attention in human interactions, combining cognitive science and computational modeling to inform human-centric technologies.

May 24, 2024

A multimodal analysis of visuo-auditory and spatial cues and their role in guiding human attention during real-world social interactions.

April 1, 2024