The Observer Lens: Characterizing Visuospatial Features in Multimodal Interactions

This doctoral thesis examines how visuospatial features, both low-level (e.g., kinematics) and high-level (e.g., gaze, speech), shape perception and attention in human interactions. It combines cognitive science and computational modeling to inform human-centric technologies.

May 24, 2024

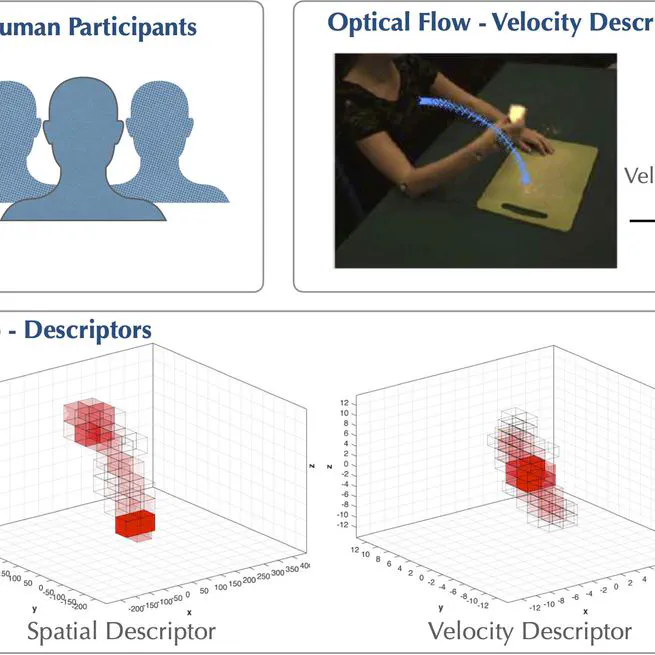

Kinematic Primitives in Action Similarity Judgments: A Human-Centered Computational Model

This study compares human and computational judgments of action similarity, revealing that both rely heavily on kinematic features such as velocity and spatial cues, and that humans rely little on action semantics.

January 30, 2023

DREAM Data Pipeline

Python-based multimodal data processing for the EU Horizon 2020 DREAM project on robot-assisted autism therapy.

March 31, 2019