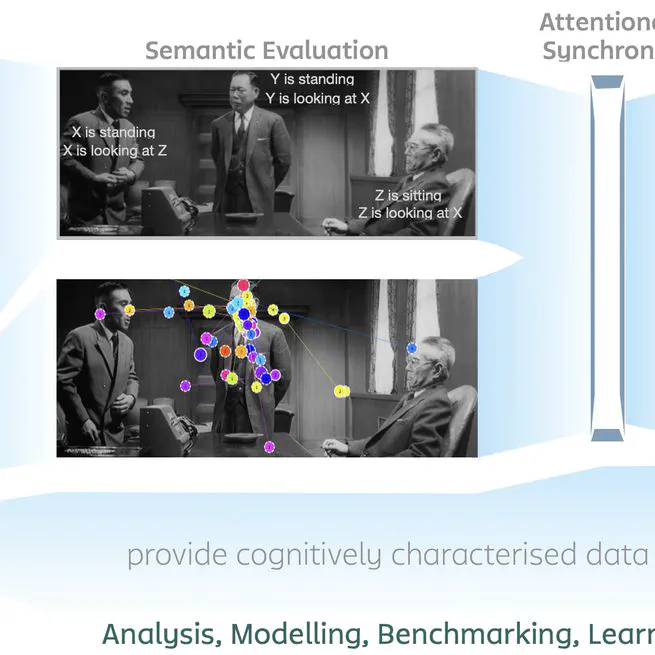

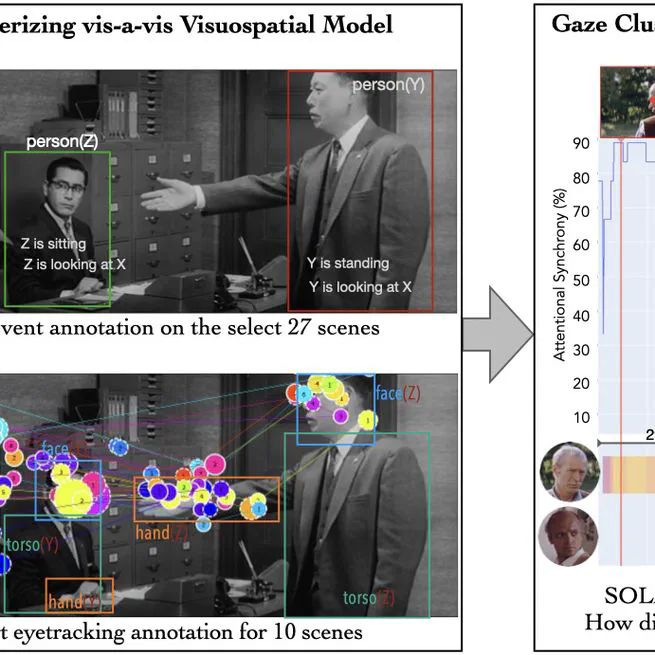

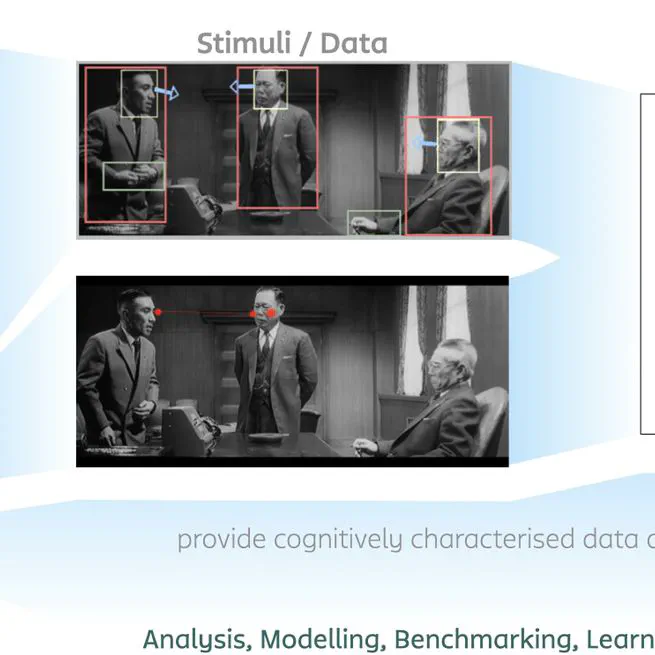

This study analyzes how visuospatial features in film scenes shape attentional synchrony, using eye-tracking data to reveal how scene complexity guides visual attention during event perception.

September 22, 2022

This study explores how visuospatial features in film scenes, such as occlusion or movement, relate to anticipatory gaze and event segmentation, using eye-tracking data and multimodal analysis to uncover patterns in how people understand events.

June 1, 2022

This research develops a conceptual cognitive model to examine how multimodal cues, such as gaze, motion, and speech, influence event segmentation and prediction in narrative media, drawing on detailed scene analysis and eye-tracking data from naturalistic movie viewing.

September 17, 2021