Consulting & Research Services

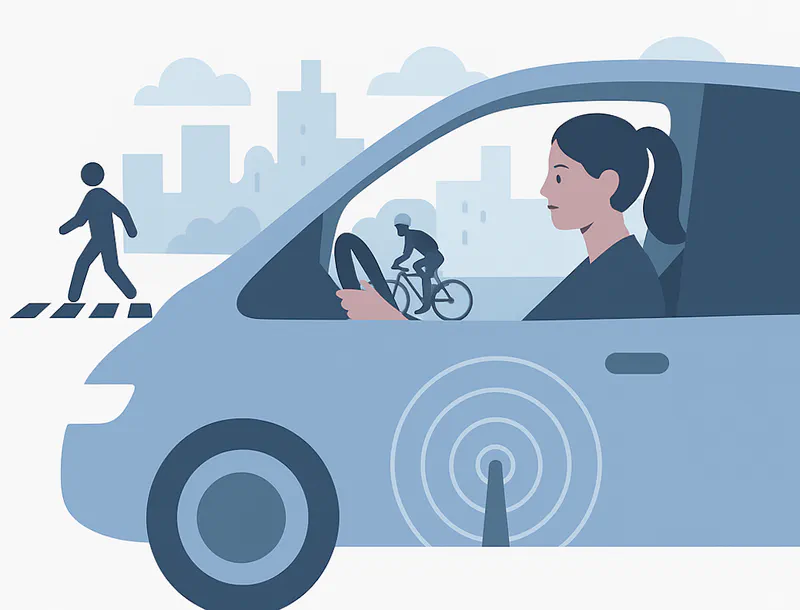

Driving Behavior & Streetscape

Develop safer, context-aware, and human-centered autonomous and assisted driving systems through research on driver attentiveness, pedestrian behavior, and multimodal streetscape cues.

- Driver Attentiveness, Distraction & Cognitive Load Studies

- Pedestrian & Cyclist Streetscape Behavior Modeling

- Visual, Audio & Spatial Cues for Human Intention Modeling

- Streetscape Simulation & Synthetic Data Generation

- Cognitive Insights for Driver–Vehicle Interaction

- Evaluation Frameworks for Ecological Validity

Cognitive Media Analytics

Decode audience behavior, attention, and interpretation of media through advanced visuospatial and cognitive analysis methods.

- Audience-Centric & Semiotic Media Analysis

- Perception and Anticipation Modeling

- Event Segmentation & Interaction Modalities

- Attentional Synchrony & Visual Attention Mapping

- Edits, Composition & Aesthetics Analysis

- Multimodal Scene Structure & Element Capture

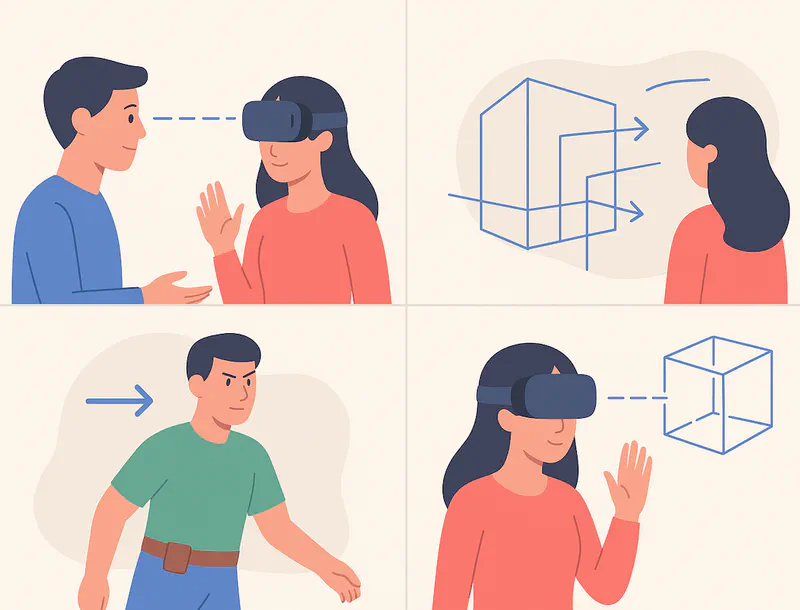

Animacy & Virtual Environments

Design immersive and behaviorally realistic virtual environments for gaming, simulation, training, and human–AI avatar interaction.

- Visual, Auditory & Spatial Interaction Cue Design

- Nonverbal Behavior & Attention Cue Modeling

- Realistic Social Cue (Gaze, Gesture) Animation Systems

- Embodied Design for Immersion (Game & Simulation)

- Cognitive Insights on Wayfinding & Navigational Cues

- Task-Specific & Adaptive Interaction Design

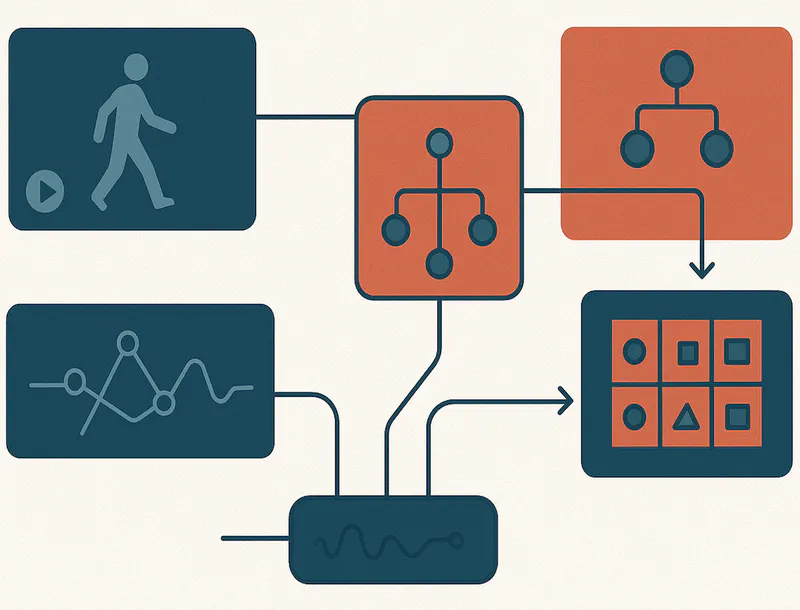

Human Event Recognition

Design, deploy, and optimize systems that automatically recognize, track, and interpret human actions and events from video and multimodal data, enabling context-aware computing.

- Human Action / Event Recognition & Retrieval

- Human-Human / Human-Object Interaction Recognition

- Human Event Knowledge Module Development

- Context-Specific Scene Understanding

- Multimodal Data Fusion for Robust Recognition

- Performance Benchmarking & Testing

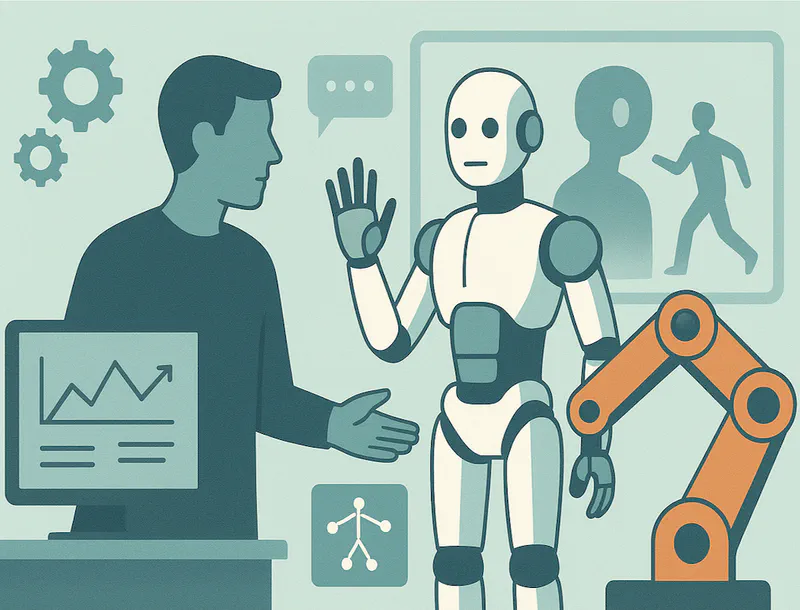

Cognitive & Social Robotics

Design, test, and deploy humanoid and social robots by leveraging human-centered, cognitive, and multimodal behavioral insights.

- Human-Human / Human-Object Interaction & Scene Understanding

- Multimodal Human Behavior Integration

- Social Expectation & UX Evaluation Frameworks

- Motion, Gesture & Gaze Emulation

- Dialogue (Turn-Taking) Management

- Engagement, Emotion & Intention Recognition

Behavioral Data Analytics

Preprocess, annotate, and analyze human behavioral data (video, sensors, multimodal streams) to generate actionable insights for design, performance, and decision-making.

- Data Pipeline Design, Preprocessing & Multimodal Integration

- Annotation Frameworks & Human Behavior Coding Systems

- Controlled Vocabulary, Taxonomy & Ontology Development

- Actionable Metric Extraction & Interaction Performance Analytics

- Annotation Tooling, Automation & Scalable Behavioral Databases

- Validation, Quality Assurance & Benchmarking