Modeling Visual Attention in Naturalistic Human Interactions

Abstract

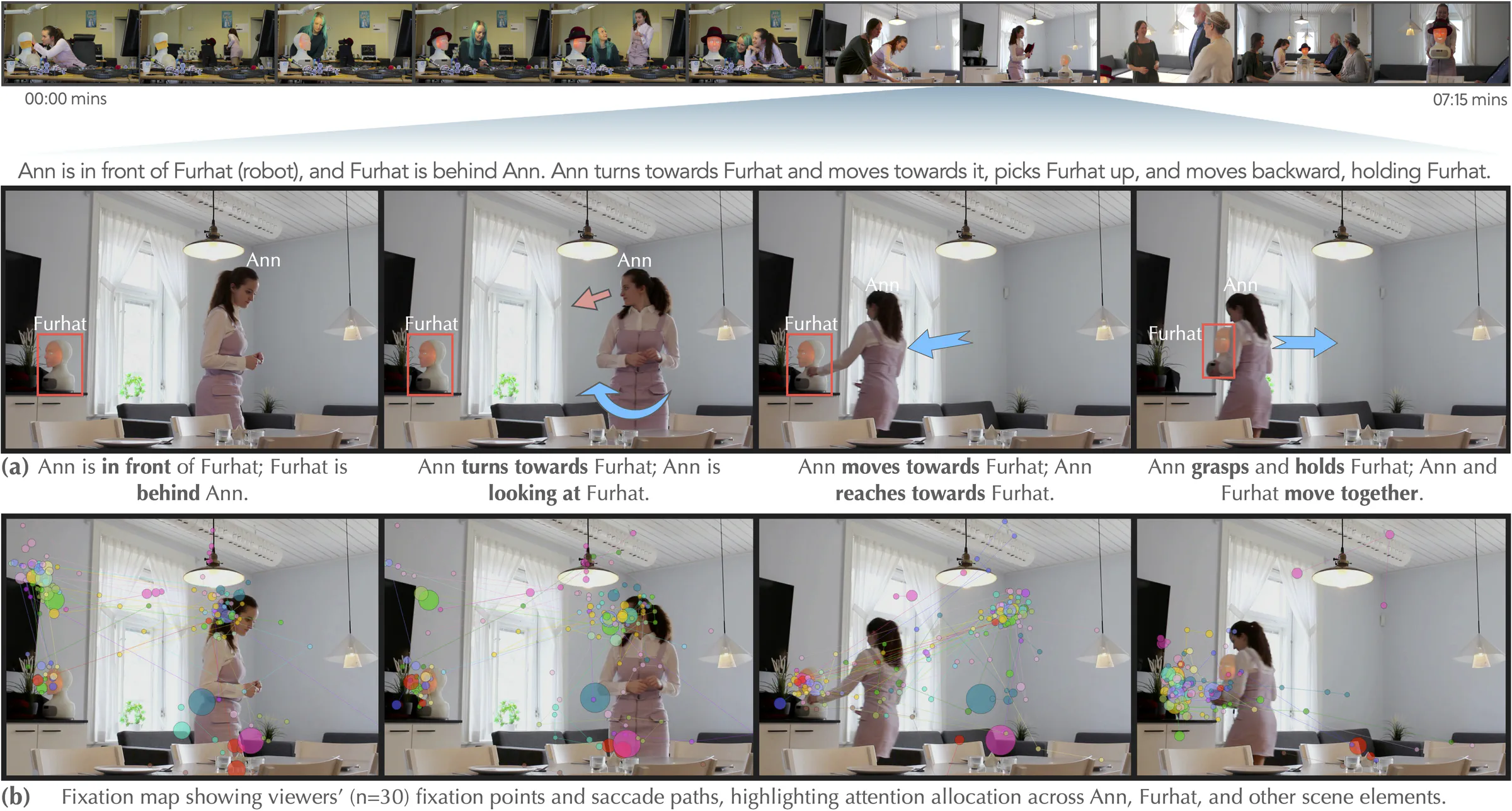

Our research investigates how visuoauditory cues (such as gaze, speech, motion, hand actions, and exit/entry) guide visual attention during passive observation of everyday human interactions. Using a structured event model and eye-tracking data from 90 participants across 27 acted interaction scenarios, we analyze how these cues individually and collectively influence where people look. The findings reveal strong intra- and cross-modal attentional effects, offering new insights into the dynamics of attention in real-world contexts. This framework provides a robust method for studying human attention vis-à-vis human interaction and has broad applications in human-centered design, cognitive modeling, and interactive systems.

Publication

TBA