A Naturalistic Embodied Human Multimodal Interaction Dataset: Systematically Annotated Behavioural Visuo-Auditory Cue and Attention Data

May 1, 2025

Vipul Nair

Mehul Bhatt

Jakob Suchan

Erik Billing

Paul Hemeren

Abstract

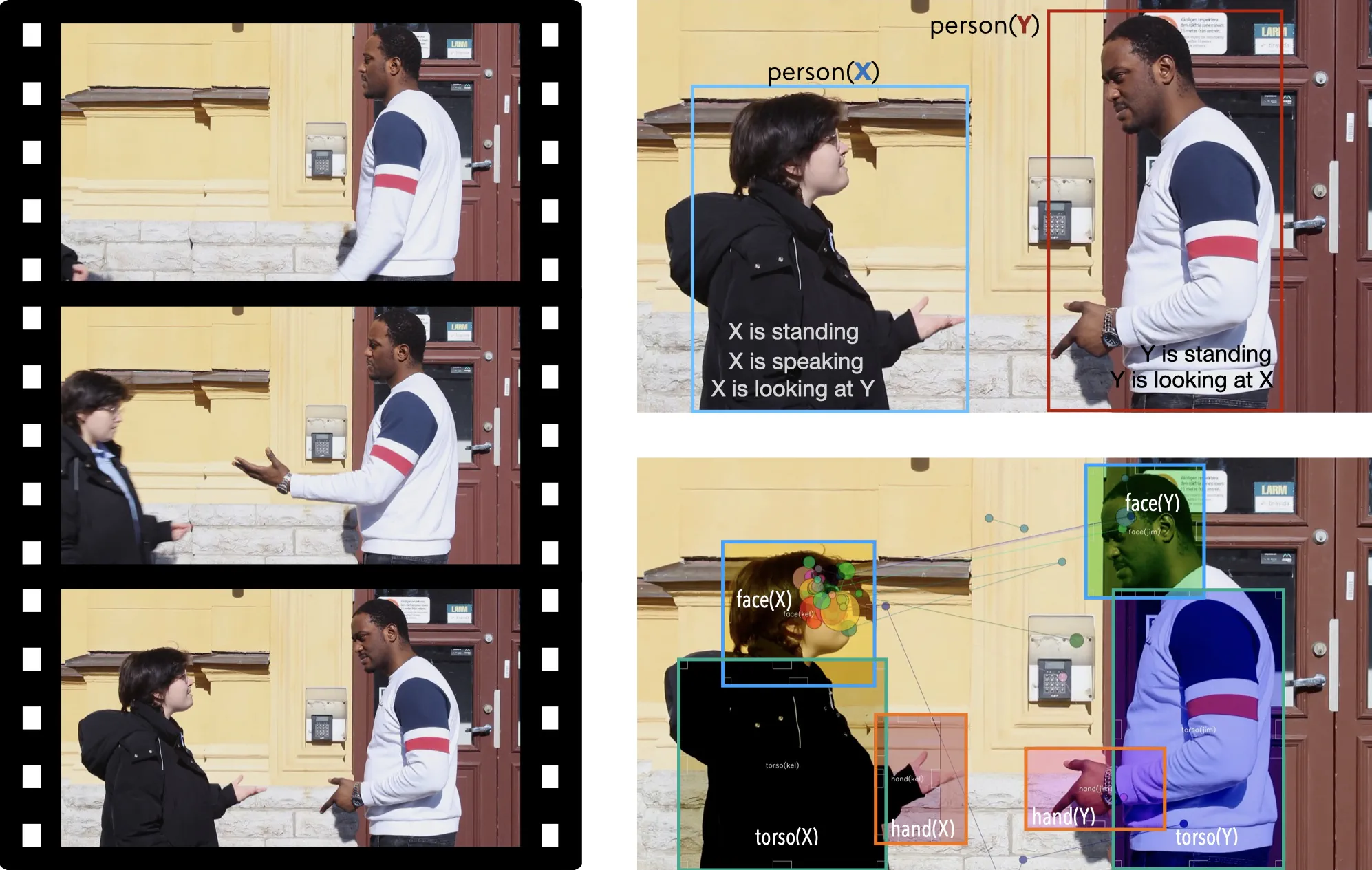

We present a richly annotated dataset designed to capture the complexity of real-world human interactions. It features 27 short event-based scenes across 9 everyday acted contexts, performed by 32 individuals and annotated with detailed visuo-spatial and auditory cues. Each scene includes eye-tracking data from 90 viewers and frame-by-frame annotations of body parts, actions, and visual attention. This multimodal dataset bridges the gap between controlled lab studies and real-life behavior, making it well suited to both behavioral research and computational modeling. It supports work in human-human interaction, attention modeling, and cognitive media studies by offering a high-resolution look at what people attend to in everyday events.

Publication

PsyArXiv Preprint (June 2025)