VR Visual Search

Overview

Feasibility Study of Oculus Rift Device in Visual Search Experiments

📅 Jan 2015 – Apr 2015

🏛️ Attention, Perception & Emotion Lab, Center for Cognitive Science, IIT Gandhinagar

🎓 Supervised by Assoc. Prof. M. M. Sunny

This project was a behavioral feasibility study testing whether the Oculus Rift DK2 could reliably simulate depth cues for visual search experiments, with a focus on size asymmetry effects in 3D environments.

Visual search tasks are a foundational method in cognitive psychology, used to investigate how we detect, process, and respond to visual information. Although these tasks are traditionally run on 2D displays, there is growing interest in using virtual reality to improve ecological validity.

Research Objectives

- Assess the feasibility of using the Oculus Rift DK2 for behavioral experiments

- Examine how participants interpret depth and size asymmetry, i.e. the difference between searching for “large among small” and “small among large” spheres

- Evaluate depth perception accuracy using stereoscopic rendering with controlled z-axis variations (Front: Z=20, Back: Z=25)

Can consumer VR hardware (Oculus DK2) accurately manipulate depth perception to replicate classical asymmetry effects (Wolfe, 2001) in visual search?
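As a rough illustration of what the depth manipulation does to retinal size, the sketch below computes the angle each sphere would subtend for a viewer at the origin. It assumes that the sphere sizes listed under Methodology (8 and 6) are diameters, that the Z values (20 and 25) are viewing distances in the same scene units, and that the viewer looks straight at each sphere; none of these are confirmed by the study description. The function name `angular_diameter_deg` is hypothetical.

```python
import math

def angular_diameter_deg(diameter, distance):
    """Angle in degrees subtended by a sphere whose centre is `distance` away."""
    radius = diameter / 2
    if distance <= radius:
        raise ValueError("viewer is inside or touching the sphere")
    # For a sphere, the tangent lines from the eye give 2 * asin(r / d).
    return math.degrees(2 * math.asin(radius / distance))

# Values from the study description. Treating them as a diameter and a
# viewing distance in the same scene units is an assumption of this sketch.
SIZES = {"large": 8, "small": 6}
DEPTHS = {"front": 20, "back": 25}

angles = {
    (size, depth): angular_diameter_deg(d, z)
    for size, d in SIZES.items()
    for depth, z in DEPTHS.items()
}

for (size, depth), angle in sorted(angles.items()):
    print(f"{size:5s} {depth:5s} {angle:6.2f} deg")
```

Under these assumptions, a large sphere on the back plane still subtends a slightly larger angle than a small sphere on the front plane, so the depth manipulation would shrink the retinal size difference without reversing it.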

Methodology

- Replicated Wolfe’s asymmetry paradigm with a custom 3D environment

- Software: Vizard 5.0 (Python-based) on Dell Studio 1555

- Experimental Design:

• 2×2×2 factorial design: Size (large [8,8,8] vs. small [6,6,6] spheres) × Depth (front vs. back) × Target (present vs. absent)

• Blocked presentation with counterbalancing

- Apparatus:

• Oculus DK2 (960×1080 per eye @ 60Hz) with Lens A/B for vision correction

• Keyboard response mapping (← absent, → present)

- Metrics: Reaction Time (RT) & Accuracy

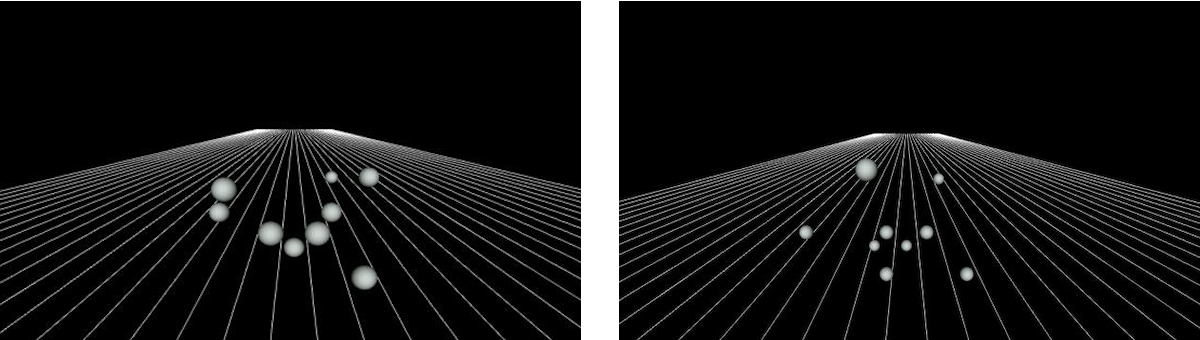

Stimulus display for the Oculus Rift DK2 visual search task, with vanishing-point lines on the backdrop to simulate depth.
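The factorial design and blocked, counterbalanced presentation described above could be generated along the following lines. This is a minimal sketch in plain Python, not the study's Vizard script: blocking by depth, alternating block order by participant number, and the `trials_per_cell` value are all assumptions made for illustration, and `build_session` is a hypothetical name.

```python
import itertools
import random

SIZES = ("large", "small")
DEPTHS = ("front", "back")
TARGETS = ("present", "absent")

def build_session(participant_id, trials_per_cell=10, seed=None):
    """Return an ordered trial list for one participant.

    Blocking by depth and the value of trials_per_cell are assumptions
    made for this sketch, not details taken from the study.
    """
    rng = random.Random(seed)
    # Counterbalance: even-numbered participants start with the front block,
    # odd-numbered participants start with the back block.
    block_order = DEPTHS if participant_id % 2 == 0 else DEPTHS[::-1]
    session = []
    for depth in block_order:
        block = [
            {"size": size, "depth": depth, "target": target}
            for size, target in itertools.product(SIZES, TARGETS)
            for _ in range(trials_per_cell)
        ]
        rng.shuffle(block)  # randomise trial order within a block
        session.extend(block)
    return session

# All 2 x 2 x 2 = 8 cells of the design.
cells = list(itertools.product(SIZES, DEPTHS, TARGETS))

session_a = build_session(participant_id=0, seed=1)
session_b = build_session(participant_id=1, seed=1)
```

Seeding the generator per participant keeps each session reproducible, which helps when a run has to be repeated after a headset problem.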

Key Results

- Oculus DK2 enabled depth manipulation but with limitations:

• Peripheral distortion (fish-eye lens)

• Occlusion issues between depth planes

• Backdrop-induced depth ambiguity

- No significant effects for:

• Size: F(1,10)=1.118, p=0.315

• Depth: F(1,10)=0.032, p=0.861

• Size×Depth: F(1,10)=0.041, p=0.843

- Overall accuracy: 77% in target detection

- Participant feedback:

• Depth perception influenced by y-axis position

• Discoloration at display corners

• Critical need for pre-trial acclimation
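The reported p-values can be cross-checked from the F statistics alone. The sketch below relies on the identity that an F statistic with 1 numerator degree of freedom is the square of a t statistic with the same error degrees of freedom, and integrates the Student t density numerically with Simpson's rule, so it needs only the standard library. The function names are hypothetical, and this is a sanity check rather than the analysis used in the study.

```python
import math

def t_pdf(t, df):
    """Density of Student's t distribution with `df` degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + t * t / df) ** (-(df + 1) / 2)

def p_value_f1(f_stat, df_error, steps=2000):
    """Upper-tail p-value for F(1, df_error) via the two-sided t tail.

    `steps` must be even because Simpson's rule works on pairs of intervals.
    """
    upper = math.sqrt(f_stat)  # F(1, d) = t(d)^2
    h = upper / steps
    total = t_pdf(0.0, df_error) + t_pdf(upper, df_error)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df_error)
    half_central = total * h / 3       # integral of the density over [0, sqrt(F)]
    return 1 - 2 * half_central        # probability mass outside [-sqrt(F), sqrt(F)]

reported = {"Size": 1.118, "Depth": 0.032, "Size x Depth": 0.041}
p_values = {name: p_value_f1(f, 10) for name, f in reported.items()}

for name, p in p_values.items():
    print(f"{name}: p = {p:.3f}")
```

The recomputed values agree with the reported p-values to within about 0.001, which is what one would expect given that the F statistics are rounded to three decimals.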

Technical Highlights

- Vizard 5.0 experimental pipeline

- Stimulus Design:

• 9-sphere arrays with anti-occlusion logic

• Vanishing-point backdrop (depth cue simulation)

• Fixed coordinate ranges for lens distortion compensation

- Hardware Optimization:

• Lens customization for vision correction

• Keyboard modification for blind operation

• Nausea screening protocol
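The anti-occlusion logic and fixed coordinate ranges mentioned under Stimulus Design could be approximated with simple rejection sampling, as in the sketch below. It is plain Python rather than Vizard code, and the details are assumptions: the viewer is placed at the origin, overlap is tested on an approximate perspective projection, and the coordinate ranges, the one-target-plus-eight-distractors layout, and the names `overlaps` and `place_spheres` are all hypothetical.

```python
import math
import random

def overlaps(a, b):
    """True if two spheres (x, y, z, diameter) overlap as seen from the origin.

    Uses the projection (x/z, y/z) with projected radius (diameter/2)/z,
    which is an approximation that is adequate near the line of sight.
    """
    ax, ay, az, ad = a
    bx, by, bz, bd = b
    gap = math.hypot(ax / az - bx / bz, ay / az - by / bz)
    return gap < (ad / 2) / az + (bd / 2) / bz

def place_spheres(items, x_range, y_range, rng, max_attempts=10_000):
    """Place spheres one by one, rejecting positions that would occlude.

    items: list of (diameter, z) pairs.
    x_range, y_range: (min, max) bounds for sphere centres.
    """
    placed = []
    for diameter, z in items:
        for _ in range(max_attempts):
            candidate = (rng.uniform(*x_range), rng.uniform(*y_range), z, diameter)
            if not any(overlaps(candidate, other) for other in placed):
                placed.append(candidate)
                break
        else:
            raise RuntimeError("could not place all spheres; widen the ranges")
    return placed

# Hypothetical layout: one large target among eight small distractors,
# all on the front plane (Z=20). The x/y ranges are illustrative only.
items = [(8, 20)] + [(6, 20)] * 8
layout = place_spheres(items, x_range=(-24, 24), y_range=(-16, 16),
                       rng=random.Random(0))
```

Because each item carries its own z value, the same check also covers displays that mix front and back planes, where a near sphere could otherwise hide a far one.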

Outcomes & Impact

Demonstrated both the promise and the limitations of consumer VR for rigorous perceptual research under controlled conditions.

- Identified key constraints for VR visual search:

• Prior HMD experience required

• 23% error rate from depth ambiguities

• Hardware-induced perceptual artifacts

- Proposed design improvements:

• Side-wall depth cues (vs. central vanishing point)

• Higher refresh rates (>75Hz)

• Extended acclimation protocols

- Established baseline methodology for VR psychophysics

Future Directions

- Test more complex cognitive tasks

- Integrate eye-tracking

- Cross-device validation with newer HMDs

- Build open-source toolkit for VR experiments

- Address occlusion/backdrop issues

Key Reference

Wolfe, J. M. (2001). “Asymmetries in visual search: An introduction.” Perception & Psychophysics, 63(3), 381–389.

Collaboration Opportunities

Open to collaboration and discussion on methodology or future directions. Happy to exchange ideas and explore new perspectives.