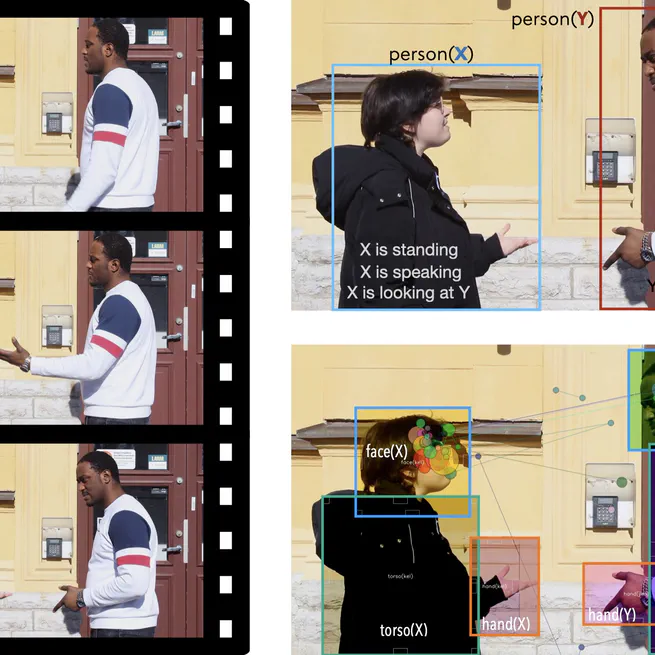

This naturalistic multimodal dataset covers 27 everyday interaction scenarios, with detailed annotations of visuospatial and auditory cues and accompanying eye-tracking data, supporting research on human interaction, attention modeling, and cognitive media studies.

May 1, 2025

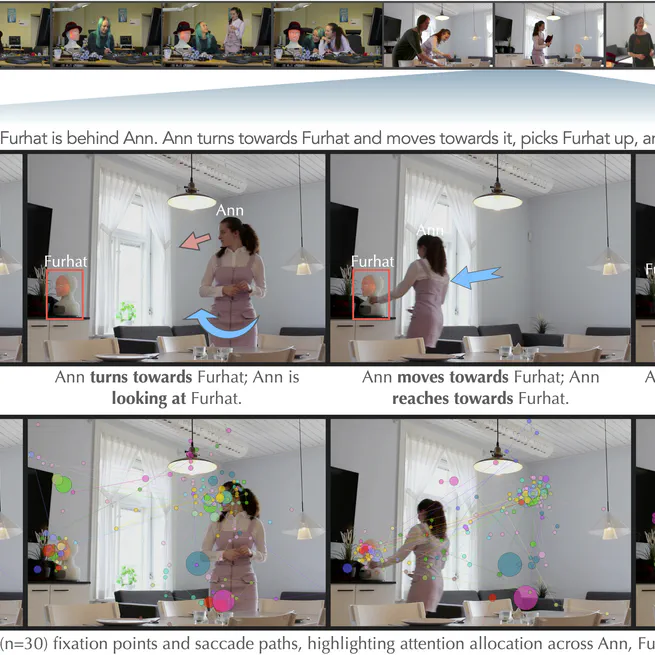

We explore how cues such as gaze, speech, and motion guide visual attention when people observe everyday interactions, using eye-tracking data and structured event analysis to reveal cross-modal patterns.

January 30, 2025

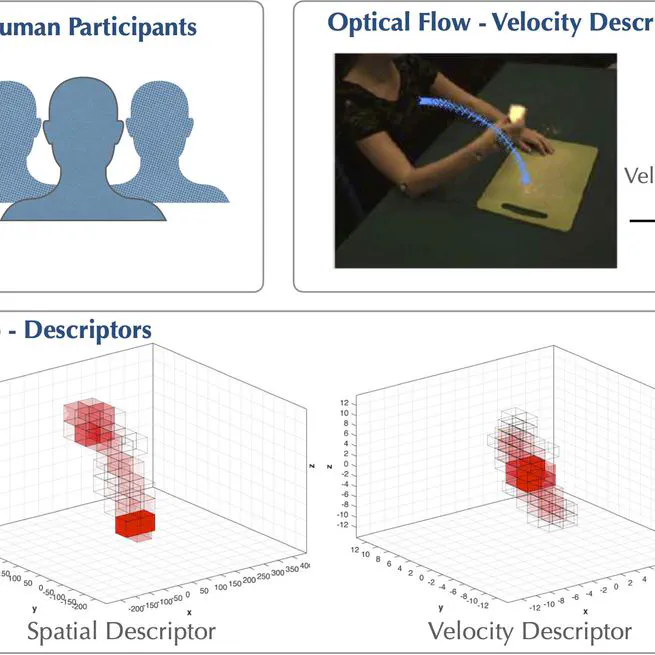

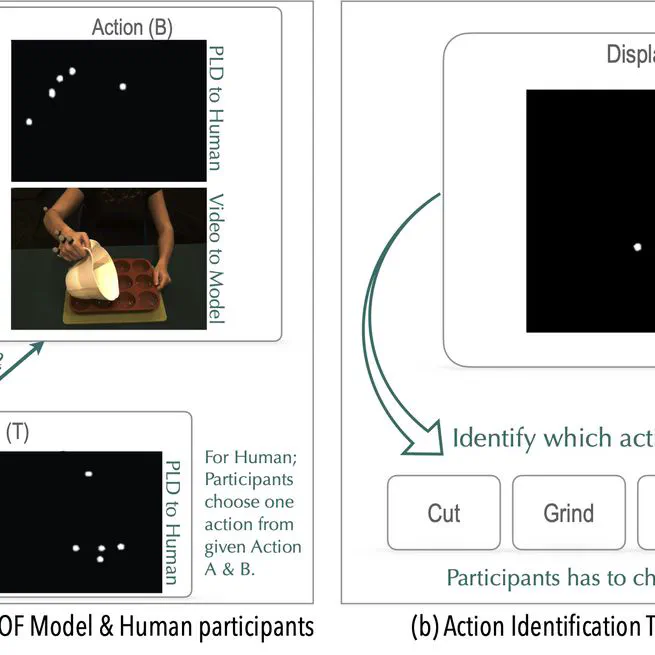

This study compares human and computational judgments of action similarity, revealing that both rely heavily on kinematic features such as velocity and spatial cues, and that humans rely relatively little on action semantics.

January 30, 2023

This study compares human judgments of action similarity with those of a kinematics-based computational model, revealing that both rely heavily on low-level kinematic features, with the model showing higher sensitivity but also greater bias.

October 30, 2020

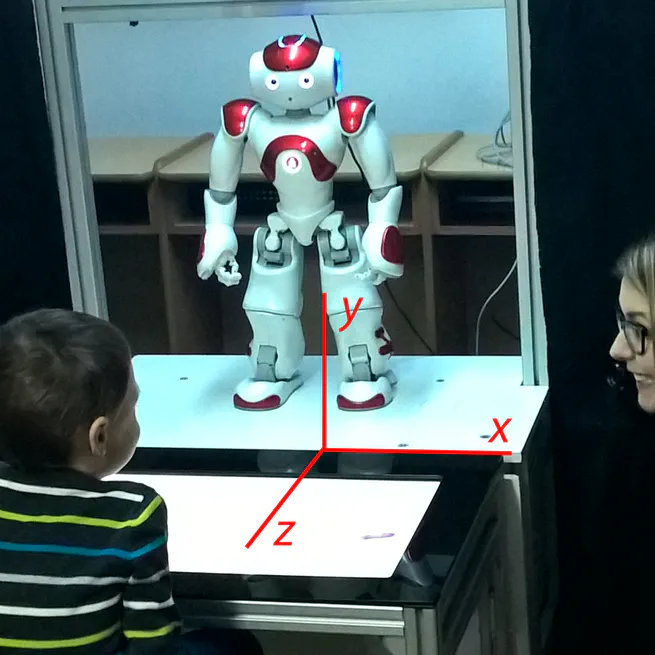

This dataset captures more than 300 hours of therapy sessions with 61 children with autism spectrum disorder (ASD), offering rich 3D behavioral data (including motion, gaze, and head orientation) from both robot-assisted and therapist-led interventions.

August 21, 2020